Backgroud

I was stuck at a question from a convex analysis book for more than a week. I couldn’t directly find its solution.

Let $K$ be a convex cone in $\R^n$, $p \ge 1$. The function $f: K \to [0,\infty)$ is homogeneous of degree $p$, i.e.

\[ f(\lambda x) = \lambda^p f(x),\quad \forall x \in K, \forall \lambda \ge 0. \]

Suppose also that $f(x) > 0 \,\forall x \in K \setminus \{x\}$. Show that $g(x) := [f(x)]^{\frac1p}$ is convex on K.

Resolution

I saw a proof for the Minkowski inequality using the convexity of the function $x \mapsto x^p$ on the webpage for a real analysis course. In fact, by replacing $\norm{\cdot}$ with $g(\cdot)$ in the proof of Theorem 7, we’re done.

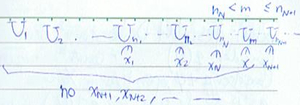

First, observe that

Therefore, it suffices to show that

\begin{equation} g(x + y) \le g(x) + g(y). \label{goal} \end{equation}

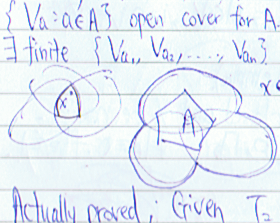

The assumption $\norm{\vect{u}}_p = \norm{\vect{v}}_p = 1$ can be changed to $g(x) = g(y) = 1$. Then we’ve $f(x) = f(y) = 1$. By the convexity of $f$ on $K$, $\forall x,y \in K, \forall \lambda \in [0,1]$,

Since it’s given that $f(x) \ge 0 \,\forall x \in K$, we can say that

\begin{equation} g(\lambda x + (1 - \lambda) y) \le 1. \label{trick} \end{equation}

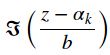

Finally, for any $u,v \in K$, we let

Making use of \eqref{homo}, we substitute \eqref{coeff} into \eqref{trick}.

We get \eqref{goal} at last.